Our Reasoning about AI Reasoning isn't Reasonable

This week's Socos Academy explores the limits of our own reasoning and AI reasoning.

Mad Science Solves...

A great many brilliant people have been claiming that AI, and particularly large language models (LLMs) like GPT, are engaged in reasoning. They suggest, and at times outright claim, that these systems are smart not like a calculator but like a person. I don’t want to oversell my confidence in my opinion about these claims, but they are horseshit. These aren’t the claims of self-skeptical scientists, and the evidence presented (amazing feats of intelligence in every imaginable domain) fails to engage with the core responsibility of science: not “is your hypothesis an explanation of the data?” but “is it the best explanation?”

LLMs are the world’s most sophisticated autocompletes. They are Jeff Elman’s models from the 90s, grown literally exponentially. That isn’t a criticism. As a scientist I am amazed, and as a user I am delighted. The numerous crucial advances that got us here (GPU programming, word2vec, attention, human-in-the-loop RL fine-tuning, and more) have transformed the field, and not just for LLMs. But none of those capabilities or advances has produced evidence of reasoning that remains plausible once competing hypotheses are considered.
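To make the “autocomplete” framing concrete, here is a hypothetical toy next-word predictor in Python. It is not how GPT works internally: real LLMs run transformers over subword tokens with billions of learned weights and often sample rather than always taking the top choice. What it shares with them is the training objective, predicting the next token from observed regularities, with no representation of what the words mean. The function names `train_bigrams` and `autocomplete` are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count which word follows which: pure co-occurrence statistics."""
    tokens = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows


def autocomplete(follows: dict[str, Counter], word: str, length: int = 5) -> list[str]:
    """Greedily append the most frequent next word; no model of meaning is involved."""
    out = [word]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        out.append(options.most_common(1)[0][0])
    return out


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate the fish")
    print(" ".join(autocomplete(model, "the", 5)))
```

The continuation it produces looks fluent only because it chains the most frequent successors; scale and learned representations make the real thing vastly more capable, but the objective is the same kind of thing.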

Some of the new research I review below probes the flaws in the claim that LLMs are reasoning engines. Other work has examined the claim that deep learning produces artificial intelligence that behaves like natural intelligence. One such paper found that the stimuli that optimally drove activity inside “state-of-the-art supervised and unsupervised neural network models of vision and audition were often completely unrecognizable to humans”. Their evidence strongly suggests that these “models contain idiosyncratic invariances in addition to those required by the task.” Similar findings emerge for face processing, where DNNs diverge from brains as the computation moves from lower-level sensory abstraction to higher-level behavioral relevance. For me this speaks to one of the foundational concepts in cognitive psychology: model-free vs model-based learning.

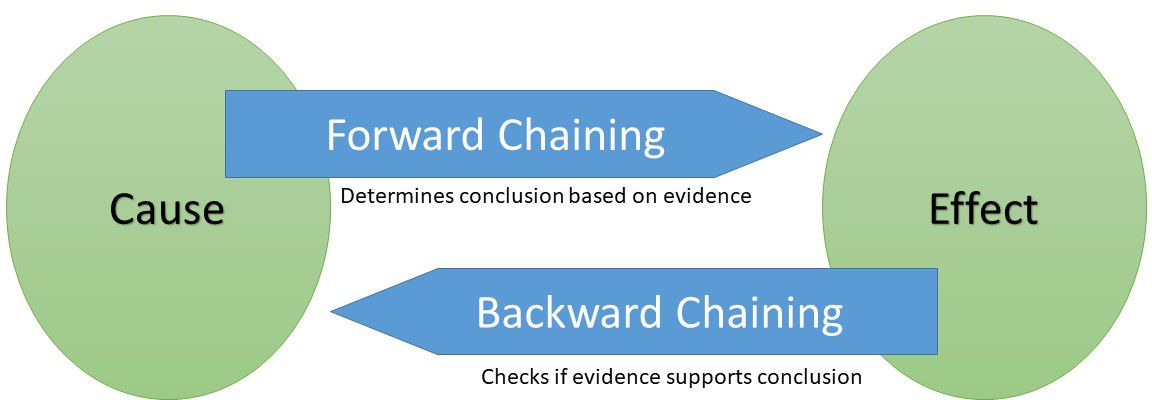

Deep learning, including LLMs, diffusion models, and much of RL, engages solely in model-free learning. Just as in diverse aspects of our own cognition, these systems engage in brute statistical modeling of patterns in the world. Model-based learning works from the same sensory data, but rather than simply learning statistical relationships, it leverages complex explanatory (sometimes causal) relationships. Model-free is raw prediction without care for mechanism, while model-based leverages mechanism for its explanatory power. The vast majority of deep learning models today are massive correlation engines engaged solely in model-free learning. (And most counterexamples, such as model-based RL, are architected by the humans designing them.) LLM intelligence looks like ours because natural intelligence also makes extensive use of model-free learning. But we do much more.
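The distinction can be made concrete with a toy version of the classic outcome-devaluation test from animal-learning research. This Python sketch is illustrative only: the two-action task, the class names, and the learning rate are assumptions, and it says nothing about how LLMs are built. Both learners get the same experience. The model-free learner caches a value per action; the model-based learner stores what each action leads to and what each outcome is worth, then plans from that. When one outcome suddenly becomes worthless, only the model-based learner can change its choice without re-experiencing the action.

```python
# Hypothetical two-action task: each action deterministically leads to one outcome.
TRANSITIONS = {"left": "A", "right": "B"}


class ModelFree:
    """Caches one value per action, learned only from (action, reward) experience."""

    def __init__(self, lr=0.5):
        self.q = {"left": 0.0, "right": 0.0}
        self.lr = lr

    def update(self, action, reward):
        # Delta-rule update: nudge the cached value toward the observed reward.
        self.q[action] += self.lr * (reward - self.q[action])

    def choose(self):
        return max(self.q, key=self.q.get)


class ModelBased:
    """Learns what each action leads to and what each outcome is worth, then plans."""

    def __init__(self):
        self.transition = {}  # action -> outcome (the learned "mechanism")
        self.reward = {}      # outcome -> value

    def update(self, action, outcome, reward):
        self.transition[action] = outcome
        self.reward[outcome] = reward

    def revalue_outcome(self, outcome, reward):
        # The outcome's worth changes without the agent re-taking any action.
        self.reward[outcome] = reward

    def value(self, action):
        # Plan: look up where the action leads, then what that outcome is worth now.
        return self.reward.get(self.transition.get(action), 0.0)

    def choose(self):
        return max(self.transition, key=self.value)


def run_devaluation(trials=20):
    outcome_reward = {"A": 1.0, "B": 0.5}
    mf, mb = ModelFree(), ModelBased()
    for t in range(trials):
        action = "left" if t % 2 == 0 else "right"  # sample both actions equally
        outcome = TRANSITIONS[action]
        reward = outcome_reward[outcome]
        mf.update(action, reward)
        mb.update(action, outcome, reward)
    before = (mf.choose(), mb.choose())

    # Devalue outcome A. Only the model-based learner has a place to store this;
    # the model-free learner's cached action values are untouched.
    mb.revalue_outcome("A", 0.0)
    after = (mf.choose(), mb.choose())
    return before, after


if __name__ == "__main__":
    print(run_devaluation())
```

`run_devaluation()` returns `(("left", "left"), ("left", "right"))`: after training both learners prefer "left", but only the model-based one switches once outcome A loses its value.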

Any meaningful reasoning capability will likely require LLMs to move beyond their model-free limitations. Every example of LLM reasoning I’ve seen is better explained by the model “simply” having implicitly “memorized” the answers from token regularities in its training data (which includes the very cool possibility of it modeling meta-regularities in token patterns). Human language production is highly regular and stereotyped. We’re on autopilot most of the time our lips are flapping, so it’s not surprising that a model-free correlation engine captures this so well. What is amazing is that with a few trillion parameters it becomes a parrot that knows a hell of a lot about everything.

But there’s the problem: GPT knows everything…it understands nothing. We are failing to recognize the difference. This doesn’t make GPT or Bard or Claude failures. They are amazing tools, perhaps the most sophisticated ever invented. And someday they may be more than that. Today, however, we remain the only scientists—reasoning, self-skeptical explorers of the unknown—and we must do a better job of understanding our creations.

Stage & Screen

LA (November 1-5): From Beverly Hills to Malibu, I'm living the hard life in LA this week. This trip will involve billionaires and their problems, and so might not be terribly interesting to the rest of us. But I will be sipping kombucha with the X-Prize crowd.

LONDON (November 13-17): Hey UK, I'm coming your way! And it's not just London this time.

- I'm speaking at the University of Birmingham on the 14th. (link to come)

- I'll be giving a keynote for the FT's Future of AI on the 16th. Buy your ticket now!

- And I'm even flying up for the Aberdeen Tech Fest with my keynote on the 17th.

New York City (November 30 - December 7): Then in December I'm back in NYC on my endless quest to find the fabled best slice.

- I'm talking "AI, Ethics, and Investments" for RFK Human Rights on the 30th.

- (I'll be doing a remote keynote for the UK on the 4th: Developing Excellence in Medical Education.)

- We'll be cogitating over the future of healthcare with the new ARPA-H on the 5th.

- And I'll be at the RFK Ripple of Hope Gala on the 6th!

I still have open times in both London and NYC. I would love to give a talk just for your organization on any topic: AI, neurotech, education, the Future of Creativity, the Neuroscience of Trust, The Tax on Being Different ...why I'm such a charming weirdo. If you have events, opportunities, or would be interested in hosting a dinner or other event, please reach out to my team below.

I will share with unabashed pride that multiple people this year have said, "That was the best talk I've ever attended."

P.S., London and NYC are just the places I'll already be. Fly me anywhere and I'm yours. (With some minor limitations on the definition of "anywhere".)

Research Roundup

It's circular reasoning all the way down

Hint to ML engineers and the companies that employ them: society is not interested in whether your algorithms maximize their objective functions; we want to know if those functions actually make the world a better place. So if you want to prove that using people's private data adds value to their lives, pointing to increases in the very click-through rates your algorithms were designed to maximize is not very persuasive. Yet that is exactly the methodology in “The Value of Personal Data in Internet Commerce: A High-Stake Field Experiment on Data Regulation Policy”.

The experiment was run on “a random subset of 555,800 customers on [site of the Chinese eCommerce giant] Alibaba”. The personal data of this subset of users was withheld from the recommendation algorithms, which then, predictably, recommended more generic products. As a result, users both browsed less and bought fewer items. Some economists might argue that the increased market activity from using personal data means its use increases the welfare of both users and sellers. In keeping with that view, the authors point out that the “negative effect” disproportionately harms “niche merchants and customers who would benefit more from E-commerce.”

The assumption that consumers’ welfare is reflected in the revealed preference of click-through rates and transaction volume is nonsense without an explicit experiment demonstrating its external validity and, crucially, accounting for the heterogeneity of contexts and individuals. An experiment like this can’t use the very variables the system was designed to maximize (click-through and market volume) to argue for its welfare benefits; otherwise, it’s circular reasoning all the way down. Instead, why not explore how alternative measures of “welfare” produce different outcomes in people’s lives?

How many uses can you memorize for a candle?

A new pastime for AI researchers has been giving classic psychological experiments to large language models (LLMs), like ChatGPT. It reminds me of the careers made from rerunning every past experiment but in an fMRI. Both are interesting, but they are also frequently just fishing expeditions without a clear mechanism or hypothesis to test. In the case of LLMs, the very assumption of what the test is meant to assess goes unscrutinized. I call this the illusion of reasoning fallacy.

Take, for example, a recent assessment of creativity using the well-worn alternative uses task (AUT). Participants are asked to produce as many alternative uses as possible for some everyday object: a candle, a matchbook, a rope, and so forth. The experimenter can measure both the number of ideas and their conceptual spread (i.e., semantic distance). If you pit humans against LLMs, “on average, the AI chatbots outperformed human participants.” Humans typically produce “poor-quality ideas” while the AIs are “more creative”. The one good note for humanity: “the best human ideas still matched or exceed those of the chatbots”. Damning praise.
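The scoring side is easy to sketch, which is part of the problem. One common approach represents the object and each response as embedding vectors and scores originality as semantic distance, one minus their cosine similarity. The Python below is a hypothetical sketch: the 3-d vectors are invented for illustration, and real AUT studies differ in how they embed responses and aggregate scores.

```python
import math

# Toy 3-d "embeddings" invented for illustration; real studies use pretrained models.
CANDLE = [1.0, 0.0, 0.0]
RESPONSES = {
    "light a room": [0.9, 0.1, 0.0],
    "paperweight": [0.2, 0.9, 0.1],
    "wax seal for a letter": [0.5, 0.5, 0.7],
}


def cosine_similarity(u, v):
    # Assumes both vectors are non-zero.
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def semantic_distance(prompt_vec, response_vec):
    # 1 - cosine similarity: 0 for the same direction, larger for more remote ideas.
    return 1.0 - cosine_similarity(prompt_vec, response_vec)


def score_aut(prompt_vec, response_vecs):
    """Fluency = number of responses; originality proxy = mean semantic distance."""
    if not response_vecs:
        return {"fluency": 0, "mean_distance": 0.0}
    distances = [semantic_distance(prompt_vec, r) for r in response_vecs]
    return {"fluency": len(response_vecs), "mean_distance": sum(distances) / len(distances)}


if __name__ == "__main__":
    print(score_aut(CANDLE, list(RESPONSES.values())))
```

Nothing in these numbers records whether a use was imagined on the spot or recalled from a catalogue of previous answers.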

Of course, embedded in the very nature of the experiment are certain assumptions about creativity, memory, and cognition. So is GPT or Bard truly more creative than the average human? If I tested humans on the AUT after allowing them to train for it (e.g., memorizing previous AUT ideas and cataloging alternative uses in fiction and nonfiction), they would perform better, some of them much better. But surely we wouldn’t say that those well-studied humans are “more creative”. Their metacognition and routine knowledge would have changed, not their creativity (e.g., divergent thinking). Comparing humans and LLMs on the AUT is very much like comparing naive vs trained humans on the AUT, then concluding that the trained ones have a richer imagination.

I recall when Google’s own people analytics team found that its (then) infamous brainteaser job interviews did not predict job performance. At the time, I hypothesized that these assessments would have shown predictive validity if candidates had not known they would be asked to come up with alternative uses for candles or how many basketballs could fit in this room. With Google’s stellar HR brand at the time, however, there were few mysteries: if you googled “how I got a job at Google” you saw blog post after blog post about cramming for the brainteasers. Testing how well people prepare for exams could have some value, but it is not an assessment of creativity, problem solving, or any of the qualities Google thought it was assessing.

The AUT for LLMs is like brain teasers for those prospective Googlers: it’s testing something, just not what we thought. It is worth better understanding the difference.

On a tangentially related note, a fascinating alternative AUT recently revealed a potentially huge insight about math ability. Students with very different levels of math achievement “were asked to design solutions to complex problems prior to receiving instruction on the targeted concepts.” Many students seemingly struggling in math were able to match the “inventive production” of their higher-achieving peers. Crucially, inventive production “had a stronger association with learning from [productive failure] than pre-existing differences in math achievement”.

Here humans show something amazing: inventive production combined with productive failure drives learning by exploration. If we want AI to do the same, or even to understand and value what is unique to us, we need a better understanding of the very real differences between natural and artificial cognition.

For more on the illusion of reasoning fallacy, Technology Review has a solid review:

Books

My editor and I have been making so much progress on the new and improved How to Robot-Proof Your Kids. I'm thrilled with all of the updates and changes. It's hard to say this about my own writing, but... I might actually be proud of it.

And in my spare time (you know, when I'm not working on Dionysus, The Human Trust, Optoceutics, and Socos Labs), I'm working on a screenplay! What a fucking egomaniac. Hurray!

| Follow more of my work at | |
|---|---|
| Socos Labs | The Human Trust |
| Dionysus Health | Optoceutics |
| RFK Human Rights | GenderCool |
| Crisis Venture Studios | Inclusion Impact Index |
| Neurotech Collider Hub at UC Berkeley | |