The Value of Cost

We are constantly told to optimize for speed and ease, but new research reveals that 'efficiency' is often just a fancy word for stagnation. From brain-rotted AIs to the hidden ROI of open offices, this week we explore why the only path to long-term value involves paying the price of effort.

Research Roundup

Pay It Forward

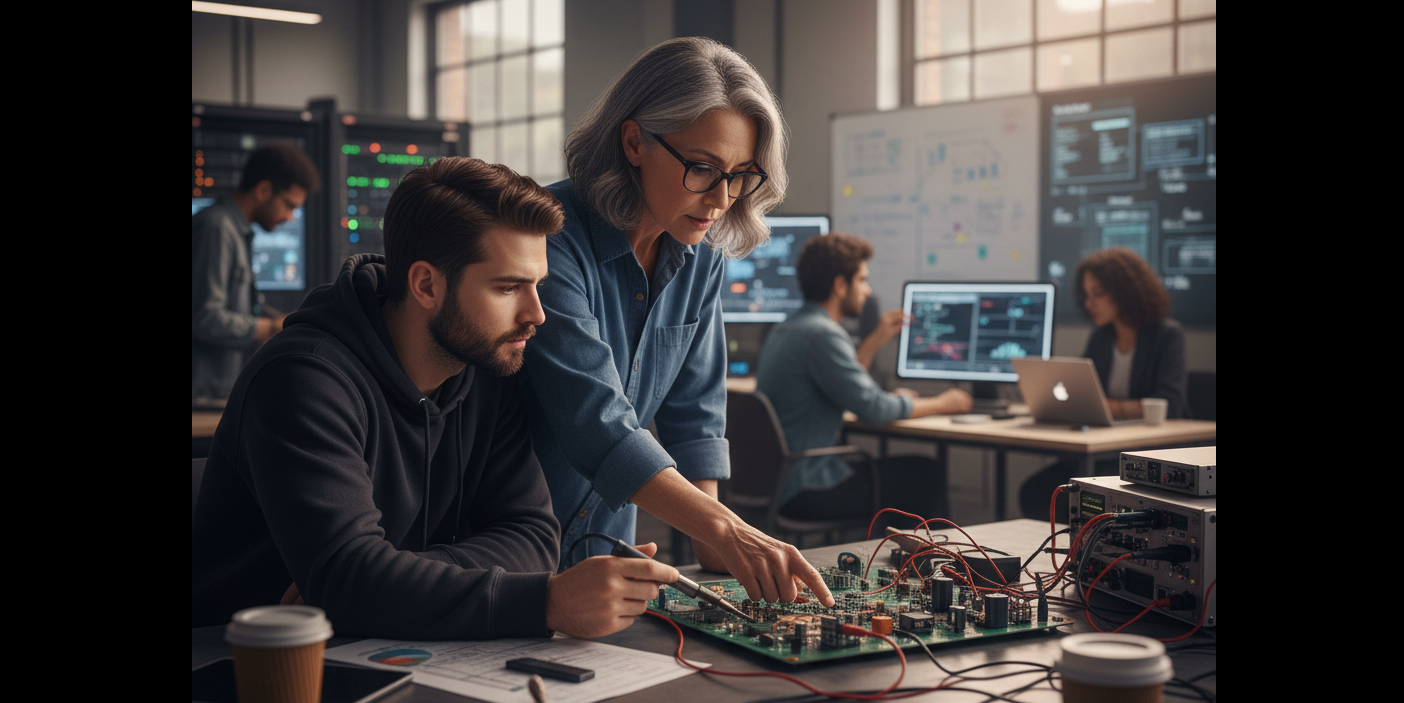

Last week I shared the new report showing that coding agents impair “conceptual understanding, code reading, and debugging abilities” in most developers. What about a very different phenomenon: do open offices help or hurt developers?

The answer is a matter of time scales. A new study looked at the impact of remote work at a Fortune 500 company. “Sitting together reduces engineers' programming output, particularly for senior engineers.” So proximity ends up “dampening short-run pay raises”.

In the long run, however, “engineers working” closer together “received 22% more…feedback” and boosted their long-run career trajectories.

This is the smoking gun for the argument that "work without effort leaves the worker unchanged." Remote work was "efficient": short-term output (lines of code) went up! But that efficiency came at the cost of productive friction (mentorship, feedback, interruption). The "efficient" workers stagnated; the "inefficient" (co-located) workers grew.

The story of a good life: near-term costs, long-term gain.

Slow & Deep, Fast & Shallow

No I’m not talking about sex. This is about taxi drivers searching for passengers. Some are deep; some are shallow. And they are not equitable.

A clear tradeoff emerges in “GPS data of taxi drivers in three major cities over different time periods”: when drivers search for passengers, slower searchers find more passengers.

In other words, efficiency is the enemy of productive exploration.

The data shows that individual drivers’ strategies are stable over time, and “only about 10% of drivers adopt the most [effective] strategy, earning nearly 20% more than the average driver”. Everyone could be engaging in costly slow searches that net more money, but most stay shallow.

[Note: the paper authors actually describe the slower drivers as more efficient, but by my definitions they mean more “productive”. They are putting in greater cognitive and driving effort (more turns) but producing even greater ridership.]

Your LLM’s got brain rot

Your LLM’s got brain rot, and it’s your fault! “Continual exposure to junk web text induces lasting cognitive decline in large language models (LLMs).”

Specifically, “continual pre-training of 4 LLMs on junk dataset[s] causes non-trivial declines…on reasoning, long-context understanding, safety, and inflating ‘dark traits’ (e.g., psychopathy, narcissism)”.

OK, ok…not surprising. But now I want you to substitute the words “engaging” and “popular” for “junk”. LLMs trained on increasing proportions of popular tweets literally became stupider.

“The gradual mixtures of junk and control datasets also yield dose-response cognition decay” with “models increasingly truncating or skipping reasoning chains”.

Guess what one of the biggest predictors of post engagement and popularity is: length. People don’t like or share long tweets. Not long books, "long" fucking tweets.

If you are not willing to pay the cognitive cost of thinking today, you’ll have no thoughts to think later. Just ask your LLM.

Media Mentions

The Night I Hacked My Son’s Pancreas: People often ask why I’m an optimist about AI despite the risks. The answer isn't in a research paper; it’s in my son’s blood sugar logs.

When my son was diagnosed with Type 1 Diabetes, we were plunged into a terrifying world of constant monitoring, sleepless nights, and the fear that a math error could be fatal. I didn't handle it by meditating. I handled it by doing the only thing I know how to do: I built a model.

In my upcoming book, Robot-Proof: When Machines Have All The Answers, Build Better People, I share the story of Jitterbug, the AI I hacked together to predict my son’s blood glucose levels an hour into the future. It wasn't about "optimizing" his childhood. It was about buying back the safety required to let him have a childhood.

Jitterbug taught me the core lesson of the book: The most powerful AI isn't the one that replaces us. It's the one that extends our capacity to love and care for one another when our own biology falls short.

Read the full story in Robot-Proof. Preorder your copy here.

SciFi, Fantasy, & Me

Just finished Alastair Reynolds’s Halcyon Years. It’s high-concept SciFi noir on an Ark ship: mysterious femme fatales, corrupt dynasties, deep-space conspiracies, and… Yuri Gagarin? A pulpy, atmospheric ride.

Stage & Screen

- March 4, Basel: I'll be giving a keynote at the Health.Tech Global Conference 2026: "Robot-Proof: How Human Agency Drives Hybrid Intelligence & Discovery"

- March 8, LA: I'll be at UCLA talking about AI and teen mental health at the Semel Institute for Neuroscience and Human Behavior.

- March 12, Santa Barbara: Economic development on the Central Coast.

- March 14, Online: The book launch! Robot-Proof: When Machines Have All The Answers, Build Better People will finally be inflicted on the world.

- Boston, NYC, DC, & Everywhere Along the Acela line: We're putting together a book tour for you! Stay tuned...

- Late March/Early April, UK & EU: Book Tour!

- March 30, Amsterdam: What else: AI and human I--together is better!

- plus London, Zurich, Basel, Copenhagen, and many other cities in development.

- April 14, Seattle: I'll be keynoting at the AACSB Business School Conference.

- May 12, Online: I'll be reading from Robot-Proof for The Library Speakers Consortium.

- June, Stockholm: The Smartest Thing on the Planet: Hybrid Collective Intelligence

- October, Toronto: The Future of Work...in the Future